Stable Diffusion 3 Early Preview: Technical Overview

Stable Diffusion 3 (SD3) introduces a set of technical changes aimed at better text-to-image generation. The most significant is a switch from the U-Net backbone of earlier versions to a diffusion transformer architecture. This is paired with flow matching, a training formulation that teaches the model a more direct path from noise to a finished image. According to Stability AI, together these improve image quality and the model’s handling of complex prompts.

SD3 comes in a range of sizes, from 800 million to 8 billion parameters, so users can balance output quality against the hardware they have available. It also interprets text prompts more accurately than earlier versions, producing images that match their descriptions more closely.

Hardware requirements depend mainly on the model size: the larger configurations need a capable GPU with plenty of memory, while the smaller ones target more modest setups. Stability AI also describes the model as efficient to fine-tune, so it can be adapted to new datasets and customized for specific uses.

Stability AI continues to prioritize responsible AI development, implementing safeguards to mitigate potential misuse of the technology.

Enhanced Architecture and Image Quality

Stable Diffusion 3 (SD3) departs from its predecessors by replacing the U-Net backbone with a diffusion transformer. The change targets weaknesses of the earlier U-Net-based models, particularly with high-resolution images and complex compositions. Stability AI reports that the new architecture yields images with noticeably greater detail and fidelity.
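To make the "transformer" part more concrete: instead of sliding convolutions over the image as a U-Net does, a diffusion transformer cuts the latent image into small patches and treats each patch as a token. The sketch below is illustrative only. It assumes a VAE downsampling factor of 8 and a patch size of 2 (settings commonly used for latent diffusion transformers, taken here as assumptions rather than confirmed SD3 values) and shows how the token count, and with it the cost of self-attention, grows with resolution.

```python
def patchify(grid, patch):
    """Split a 2D grid (list of rows) into flattened, non-overlapping patch tokens.

    Tokens are emitted row by row, the order in which a transformer would see them.
    """
    height, width = len(grid), len(grid[0])
    if height % patch or width % patch:
        raise ValueError("grid dimensions must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            token = [grid[r][c]
                     for r in range(top, top + patch)
                     for c in range(left, left + patch)]
            tokens.append(token)
    return tokens


def token_count(image_h, image_w, vae_factor=8, patch=2):
    """Number of image tokens for a pixel resolution.

    vae_factor and patch are ASSUMED illustrative values, not confirmed SD3 settings.
    """
    latent_h, latent_w = image_h // vae_factor, image_w // vae_factor
    return (latent_h // patch) * (latent_w // patch)


if __name__ == "__main__":
    toy = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy "latent"
    print(patchify(toy, 2))
    for side in (512, 1024):
        n = token_count(side, side)
        print(f"{side}x{side}: {n} tokens, {n * n:,} attention pairs")
```

Doubling the side length quadruples the token count and multiplies the number of token pairs that self-attention compares by sixteen, which is one reason high-resolution generation is demanding regardless of the exact patch settings.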

Flow matching further refines the model’s output. The model is trained to predict a velocity that carries a noisy sample along a nearly straight path toward a clean image, rather than along the curved trajectories of earlier diffusion formulations. Straighter paths can be followed accurately with fewer sampling steps, and Stability AI credits the approach with fewer artifacts and more cohesive images that represent their prompts more accurately.

In terms of image quality, early SD3 previews show sharp, clear, and vibrant images, helped by improved color rendering and shading that give creators a wider palette to work with.
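The idea behind flow matching can be shown with a toy example. In the rectified-flow form described in the SD3 research paper, a training sample is a straight-line blend x_t = (1 - t) * x0 + t * noise, and the model learns the velocity noise - x0. The sketch below replaces the trained network with a hypothetical "oracle" that already knows the target x0, purely to show why straight paths are convenient: Euler integration from pure noise lands on the target even with very few steps. A real model only approximates this velocity, so practical samplers still use more steps.

```python
import random


def interpolate(x0, noise, t):
    """Point on the straight path between data x0 (t=0) and noise (t=1)."""
    return [(1 - t) * a + t * b for a, b in zip(x0, noise)]


def velocity_target(x0, noise):
    """Flow-matching training target on a straight path: d x_t / d t = noise - x0."""
    return [b - a for a, b in zip(x0, noise)]


def oracle_velocity(x_t, t, x0):
    """HYPOTHETICAL stand-in for a trained network that already knows x0.

    On the straight path, x_t - x0 = t * (noise - x0), so (x_t - x0) / t
    recovers the constant velocity.
    """
    return [(xt - a) / t for xt, a in zip(x_t, x0)]


def euler_sample(noise, x0, steps):
    """Integrate dx/dt = v from t=1 (noise) down to t=0 (image) with Euler steps."""
    x, t, dt = list(noise), 1.0, 1.0 / steps
    for _ in range(steps):
        v = oracle_velocity(x, t, x0)
        x = [xi - dt * vi for xi, vi in zip(x, v)]
        t -= dt
    return x


if __name__ == "__main__":
    random.seed(0)
    x0 = [0.2, -0.5, 0.9]  # toy "image" with three pixel values
    noise = [random.gauss(0.0, 1.0) for _ in x0]
    print(euler_sample(noise, x0, steps=4))  # lands on x0 despite only 4 steps
```

Because the velocity is constant along a straight path, each Euler step stays exactly on that path; with a curved path the same few steps would drift off it.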

Advancements in Natural Language Processing

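A pivotal upgrade in Stable Diffusion 3 is its improved prompt understanding, often described as better natural language processing (NLP). According to the accompanying research paper, SD3 draws on several text encoders, including a T5 language model alongside CLIP, and its transformer processes text and image tokens together in the same attention layers. This lets it turn nuanced textual descriptions into visual elements more faithfully.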

The improvements in NLP are twofold:

- Prompt Precision: SD3’s ability to parse and comprehend complex prompts has been significantly refined. This means that the subtleties and intricacies of language are better captured, leading to images that more accurately reflect the creator’s intent.

- Textual Fidelity: The model demonstrates a marked improvement in generating and incorporating text within images. Whether it’s a street sign in a cityscape or the title on a book cover, SD3 handles text with a new level of clarity and relevance.

These advancements are not just technical feats; they represent a leap towards a more intuitive interaction between the creator and the AI. With SD3, the dialogue between human imagination and machine execution becomes more seamless, opening up a broader spectrum of creative possibilities.
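One way to put a number on textual fidelity is to compare the text you asked for with what an OCR tool reads back from the generated image, using the character error rate: the edit distance divided by the length of the intended text. The sketch below assumes you already have the OCR string from a tool of your choice (the OCR step is not shown), and it is a generic evaluation approach rather than Stability AI’s own method.

```python
def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(intended, ocr_text):
    """Edit distance normalized by the intended text length (0.0 means a perfect match)."""
    if not intended:
        raise ValueError("intended text must be non-empty")
    return levenshtein(intended, ocr_text) / len(intended)


if __name__ == "__main__":
    print(character_error_rate("OPEN 24 HOURS", "OPEN 24 HOURS"))  # 0.0
    print(character_error_rate("STOP", "ST0P"))  # 0.25: one wrong character out of four
```

Averaging this score over a batch of prompts that ask for specific signs or titles gives a rough, repeatable way to compare text rendering between model versions.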

Comparative Analysis: SD3 vs. Other AI Art Tools

When evaluating Stable Diffusion 3 (SD3) against other AI art tools, such as Midjourney, several factors come into play:

- Image Quality: SD3’s new architecture allows for higher resolution and more detailed images. This is particularly evident when comparing the output to other tools that may produce lower resolution or less detailed images.

- User Experience: SD3 offers a more intuitive user interface, with streamlined processes for inputting prompts and customizing outputs. This contrasts with some tools that might have steeper learning curves or less user-friendly interfaces.

- Customization and Control: With SD3, users have greater control over the image generation process, including the ability to fine-tune aspects such as style, composition, and color. Other tools may offer less granularity in customization options.

- Technology and Innovation: SD3’s diffusion transformer architecture represents the latest in AI art technology, providing a unique approach to image generation. This may differ from the technologies employed by other tools, which could be based on older or less advanced models.

- Community and Support: Stability AI has fostered a strong community around SD3, offering extensive support and resources for users. This level of community engagement can be a significant advantage over other tools that may not have as active a user base or support network.

Training and Customizing Stable Diffusion 3

Fine-tuning Stable Diffusion 3 (SD3) is designed to be accessible to both novice users and experienced machine learning practitioners. Here’s what sets SD3 apart in terms of training and customization:

- Ease of Training: SD3 can be trained on a diverse range of datasets, including custom datasets tailored to specific artistic styles or content. Stability AI says the architecture learns efficiently, so it should adapt to new data more quickly than previous versions.

- Customization: Users have the ability to fine-tune SD3 to their specific needs. Whether it’s adjusting the level of detail in images or the style of visual output, SD3 provides a flexible framework for personalization.

- Data Requirements: SD3 can be fine-tuned on relatively small datasets, but the best results come from larger, more varied ones, which help the model capture a wide spectrum of styles and subjects.

- Model Adaptability: SD3’s architecture is designed to be adaptable, allowing it to scale up or down based on the available hardware and desired output quality. This makes it suitable for a range of applications, from mobile devices to high-end servers.

- Support and Documentation: Stability AI provides comprehensive documentation and support to guide users through the training process. This includes best practices, troubleshooting tips, and community forums for peer assistance. [Research Paper]

The combination of these factors makes SD3 a robust tool for those looking to push the boundaries of AI-generated art, providing both the simplicity for beginners and the depth for experts to explore and innovate.
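Full fine-tuning updates every weight, which becomes expensive at SD3’s larger sizes. A widely used alternative for customizing diffusion models is LoRA (low-rank adaptation), which freezes a weight matrix W and learns a small update of rank r, scaled as (alpha / r) * B @ A. The source does not say which fine-tuning methods SD3 supports, so treat this purely as an illustration of the technique; the 1536-wide layer is a hypothetical size.

```python
def full_params(d_out, d_in):
    """Trainable parameters when a d_out x d_in weight matrix is fine-tuned directly."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA update B (d_out x rank) @ A (rank x d_in)."""
    return rank * (d_out + d_in)


def lora_delta(B, A, alpha, rank):
    """Dense weight update (alpha / rank) * B @ A, written in plain Python for clarity."""
    scale = alpha / rank
    return [[scale * sum(B[i][k] * A[k][j] for k in range(rank))
             for j in range(len(A[0]))]
            for i in range(len(B))]


if __name__ == "__main__":
    d = 1536  # HYPOTHETICAL layer width, for illustration only
    for r in (4, 16, 64):
        ratio = lora_params(d, d, r) / full_params(d, d)
        print(f"rank {r:>2}: {lora_params(d, d, r):>7,} params "
              f"({ratio:.2%} of {full_params(d, d):,})")
```

Even at rank 64 the update trains well under a tenth of the layer’s parameters, which is why low-rank methods are a popular route to style or subject customization on a single GPU.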

SD3 Hardware Requirements for Optimal Performance

The hardware requirements for running Stable Diffusion 3 (SD3) efficiently are an essential consideration for users looking to leverage the full potential of the model. Here’s what you need to know:

- GPU Requirements: NVIDIA GPUs with Tensor Cores, such as the A100 or V100 series, are well suited to SD3 and accelerate it noticeably, especially for the larger parameter configurations. GPU memory (VRAM) is the main constraint, since it must hold the model weights during generation.

- System Memory: Adequate system memory is crucial, with a minimum of 16 GB RAM recommended. For larger models or more complex image generation tasks, 32 GB or more may be necessary.

- Storage Space: Fast SSD storage is recommended for quicker data access and improved overall performance. The model weights themselves need far less than a terabyte, but 1 TB or more is advisable if you also plan to store training datasets.

- Processing Power: A modern multi-core CPU will ensure that the system can handle the demands of SD3, particularly for tasks that involve preprocessing of data or postprocessing of images.

- Cooling Solutions: Given the intensive nature of AI model training and image generation, an effective cooling solution is essential to maintain system stability and prevent thermal throttling.

- Power Supply: A reliable and high-wattage power supply is important, especially if running multiple GPUs, to ensure consistent performance without power-related interruptions.

By meeting these hardware specifications, users can expect a smooth and efficient experience with SD3, whether they are creating art, training new models, or experimenting with different styles and prompts.
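A quick way to relate the model sizes to GPU memory is to multiply the parameter count by the bytes each parameter occupies. The sketch below covers the diffusion model’s weights only, at common numeric precisions; it ignores activations, any text encoders, and framework overhead, so treat the results as a lower bound rather than an official requirement.

```python
# Bytes per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params, precision="fp16"):
    """Memory for the raw weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for params in (800e6, 8e9):  # smallest and largest SD3 sizes announced
        row = ", ".join(f"{p}: {weight_memory_gb(params, p):.1f} GB"
                        for p in ("fp32", "fp16", "int8"))
        print(f"{params / 1e9:.1f}B params -> {row}")
```

At half precision the 8-billion-parameter configuration needs about 16 GB for its weights alone, while the 800-million-parameter version needs about 1.6 GB, which is why the smaller sizes suit far more modest hardware.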

Real-World Applications and Creative Possibilities

Stable Diffusion 3 (SD3) opens up a world of creative possibilities across various industries. Here are some of the real-world applications where SD3 can make a significant impact:

- Digital Art and Illustration: Artists can use SD3 to generate intricate and unique pieces of art, pushing the boundaries of digital creativity.

- Game Development: Game designers can leverage SD3 to create detailed character models, environments, and textures, speeding up the development process.

- Film and Animation: SD3 can assist in storyboarding, concept art creation, and even generating animation frames, offering a new tool for filmmakers and animators.

- Advertising and Marketing: Marketers can use SD3 to quickly produce visual content for campaigns, social media posts, and more, tailored to their target audience.

- Education and Research: Educators and researchers can utilize SD3 to visualize complex concepts, historical events, or future scenarios, enhancing learning experiences.

- Fashion and Design: Fashion designers can explore new patterns, textures, and designs, using SD3 to visualize clothing and accessories before physical production.

- Architecture and Urban Planning: Architects and urban planners can generate renderings of buildings and cityscapes, aiding in the visualization of projects.

Each of these applications demonstrates the versatility of SD3, showcasing its potential to revolutionize the way we think about and interact with visual content.

Safety and Ethics: Navigating the AI Responsibly

As the capabilities of AI art generation tools like Stable Diffusion 3 (SD3) expand, so does the importance of using them responsibly. Stability AI is committed to ethical AI development and has implemented several measures to ensure SD3 is used in a manner that aligns with these values.

- Content Moderation: SD3 includes content moderation features to prevent the generation of harmful or inappropriate imagery. This is crucial in maintaining ethical standards and respecting community guidelines.

- User Guidelines: Clear guidelines are provided to users, outlining the responsible use of SD3. These guidelines help prevent misuse and encourage creativity within ethical boundaries.

- Transparency: Stability AI aims for transparency in its development process, providing insights into the model’s training data and decision-making processes. This helps users understand the limitations and capabilities of SD3.

- Collaboration with Experts: Stability AI collaborates with experts in AI ethics to continuously improve the safety features of SD3. This includes addressing potential biases and ensuring the model’s outputs are fair and unbiased.

- Community Feedback: An active feedback loop with the user community allows for the identification and resolution of ethical concerns. Users can report issues, and Stability AI responds by updating the model and its policies accordingly.

By prioritizing safety and ethics, Stability AI ensures that SD3 not only pushes the envelope in AI art generation but also does so with a commitment to the well-being of individuals and society.

Comprehensive Feature List of Stable Diffusion 3

Stable Diffusion 3 (SD3) is packed with a range of features designed to enhance the text-to-image generation process. Here’s a detailed list of its capabilities:

- Diffusion Transformer Architecture: Utilizes a novel architecture for improved image synthesis.

- Flow Matching: Trains the model along straighter noise-to-image paths, contributing to cohesive images with fewer artifacts.

- Scalable Model Sizes: Ranges from 800 million to 8 billion parameters, offering flexibility for various use cases.

- Enhanced Multi-Subject Prompt Performance: Greatly improved ability to handle prompts involving multiple subjects.

- Superior Image Quality: Generates higher resolution and more detailed images compared to previous versions.

- Advanced Spelling Abilities: Better text generation within images, including accurate spelling and placement.

- Emphasis Markers: Gives users more control over the emphasis of certain elements in the image.

- Negative Prompts: Allows users to specify what the model should avoid, refining the output.

- Inpainting and Outpainting Abilities: Offers tools for editing parts of an image or extending it beyond its original borders.

- Prompt Precision: Enhanced natural language processing for more accurate prompt-to-image translations.

- Textual Fidelity: Improved text handling within images for clearer and more relevant text incorporation.

- Safety Measures: Includes safeguards to prevent misuse by bad actors and ensure responsible AI practices.

- Community Engagement: Active collaboration with researchers, experts, and the community for continuous improvement.

- Adaptable Solutions: Provides a flexible framework for individuals, developers, and enterprises to explore creativity.

- Transparency and Ethics: Commitment to ethical AI development with insights into training data and model decisions.

- User Guidelines and Support: Comprehensive documentation and community forums for guidance and troubleshooting.
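Negative prompts are typically implemented through classifier-free guidance: at each sampling step the model makes two predictions, one conditioned on the prompt and one on the negative prompt (or an empty prompt), and the sampler extrapolates away from the second. The sketch below shows only that combination step on plain lists; the predictions are made-up numbers standing in for model outputs, and the guidance scale of 7.0 is an arbitrary example value.

```python
def guided_prediction(cond, neg, guidance_scale):
    """Classifier-free guidance: neg + s * (cond - neg).

    `cond` is the prediction for the prompt and `neg` the prediction for the
    negative (or empty) prompt. s = 1 returns `cond`; s > 1 pushes the result
    further away from whatever the negative prompt describes.
    """
    return [n + guidance_scale * (c - n) for c, n in zip(cond, neg)]


if __name__ == "__main__":
    cond = [0.30, -0.10, 0.55]  # made-up prediction for "a red bicycle"
    neg = [0.25, 0.05, 0.50]    # made-up prediction for the negative prompt "blurry"
    print(guided_prediction(cond, neg, guidance_scale=7.0))
```

Raising the scale strengthens both prompt adherence and avoidance of the negative prompt, but very high values tend to oversaturate images, so moderate settings are the usual starting point.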

This extensive feature set positions SD3 as a versatile and powerful tool for creators looking to explore the frontiers of AI-generated art.
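Inpainting and outpainting both rely on a mask that marks which pixels the model may change. A common final step is to blend the generated result back into the original through that mask so untouched regions stay identical; outpainting applies the same idea after enlarging the canvas so that only the new border is masked. The sketch below shows this compositing on tiny grayscale grids. It is a generic illustration, not SD3’s internal procedure, and the function names are hypothetical.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask == 0 and generated ones where mask == 1.

    Fractional mask values (e.g. a feathered edge) blend the two linearly.
    """
    return [[m * g + (1 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
            for o_row, g_row, m_row in zip(original, generated, mask)]


def pad_for_outpainting(image, border, fill=0.0):
    """Enlarge the canvas by `border` pixels on every side.

    Returns the padded image and a mask that is 1 only in the new border.
    """
    h, w = len(image), len(image[0])
    new_h, new_w = h + 2 * border, w + 2 * border
    padded = [[fill] * new_w for _ in range(new_h)]
    mask = [[1] * new_w for _ in range(new_h)]
    for r in range(h):
        for c in range(w):
            padded[r + border][c + border] = image[r][c]
            mask[r + border][c + border] = 0
    return padded, mask


if __name__ == "__main__":
    original = [[0.1, 0.2], [0.3, 0.4]]
    generated = [[0.9, 0.9], [0.9, 0.9]]
    mask = [[0, 1], [0, 0]]  # repaint only the top-right pixel
    print(composite(original, generated, mask))
    padded, border_mask = pad_for_outpainting(original, border=1)
    print(border_mask)
```

Keeping the compositing step separate from generation guarantees that pixels outside the mask are never altered, whatever the model produces.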

Getting Started with Stable Diffusion 3

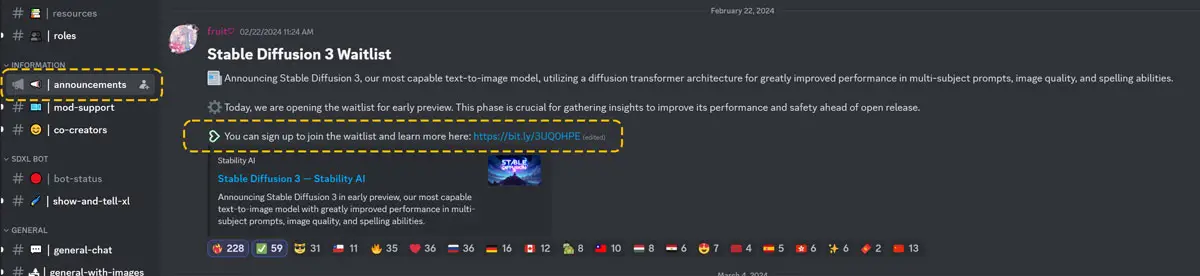

For those eager to dive in and start creating with SD3, here’s how you can get your hands on it:

Currently, SD3 is in an early preview phase, and access is by invitation only. To get a chance to use SD3, you’ll need to join the waitlist. Stability AI is using this period to gather feedback and refine the model before making it broadly available.

Once you’re on the waitlist, keep an eye on your email for an invitation to access the platform. In the meantime, you can prepare by ensuring your hardware meets the requirements and familiarizing yourself with the existing Stable Diffusion models and community resources.

Stability AI also provides comprehensive documentation and support, which will be invaluable once you start using SD3. And don’t forget to join the community discussions on platforms like Discord to stay updated on the latest news and tips from other users.

You can sign up to join the waitlist and learn more here: https://bit.ly/3UQ0HPE
